Gaston Bertels – ON4WF

The Moving Picture Experts Group (MPEG) is a working group of authorities that was formed in 1988 by ISO (International Organization for Standardization) and IEC (International Electrotechnical Commission) to set standards for audio and video compression and transmission.

First published in 1996, H.262 or MPEG-2 Part 2 is a video coding format developed and maintained jointly by ITU-T Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG).

Digital Video Broadcasting (DVB) is a set of internationally open standards for digital television.

Digital Video Broadcasting – Satellite (DVB-S) is the DVB standard for Satellite Television and dates from 1995. In the USA and Canada, similar standards are developed by the Advanced Television Systems Committee (ATSC).

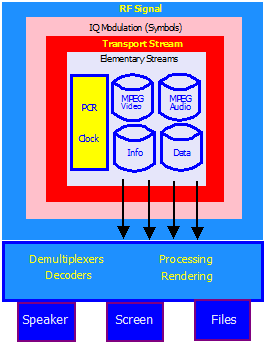

The principle of DVB is to transmit a stream of H.262 (or H.264) data, known as a Transport Stream (TS).

The Transport Stream is composed of sub-streams containing H.262 (MPEG-2) encoded information. This information can be digital video, digital audio, as well as data.

Each sub-stream, called an elementary stream, is identified by a unique “packet identifier” or PID. Each elementary stream is split into packets and becomes a packetized elementary stream or PES. Each PES is packetized again and the data are stored in transport packets. The transport packets of all streams are then multiplexed into a Transport Stream.

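Each transport packet carries the PID of its stream in its header, and time stamps carried in the stream allow the receiver to keep video and audio synchronized.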
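As an illustration of how a receiver finds the PID, here is a minimal Python sketch that decodes the 4-byte header of one transport packet. It relies on the MPEG-2 transport packet layout (188 bytes, sync byte 0x47, 13-bit PID). The names `TsHeader` and `parse_ts_header` and the example packet are made up for this sketch, and the timing fields are not parsed.

```python
from dataclasses import dataclass

TS_PACKET_SIZE = 188  # fixed transport packet length in bytes
SYNC_BYTE = 0x47      # first byte of every transport packet


@dataclass
class TsHeader:
    pid: int                  # 13-bit packet identifier
    payload_unit_start: bool  # True when a new PES packet or table section starts here
    continuity_counter: int   # 4-bit counter, incremented per PID


def parse_ts_header(packet: bytes) -> TsHeader:
    """Decode the 4-byte header of one transport packet."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a transport packet is 188 bytes long")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing sync byte 0x47")
    # The PID is the low 5 bits of byte 1 followed by all 8 bits of byte 2.
    pid = ((packet[1] & 0x1F) << 8) | packet[2]
    return TsHeader(
        pid=pid,
        payload_unit_start=bool(packet[1] & 0x40),
        continuity_counter=packet[3] & 0x0F,
    )


# Hypothetical packet: PID 0x100, start of a payload unit, counter 5, empty payload
example = bytes([0x47, 0x41, 0x00, 0x15]) + bytes(184)
header = parse_ts_header(example)
print(hex(header.pid), header.payload_unit_start, header.continuity_counter)
# prints: 0x100 True 5
```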

The reason for packetization is that it allows for powerful error-correction techniques, essential for efficient transmission.

MPEG defines a strict buffering model for its decoders. Therefore each elementary stream in the global transport stream is given a data rate that ensures the receiver can decode the stream with no buffer overruns or underruns. Since the final transport stream contains many more video packets than audio packets, we cannot simply alternate packets from each stream. One audio packet per ten video packets will probably maintain an even balance.
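The ten-to-one interleaving mentioned above can be sketched in a few lines of Python. This is only a toy model: a real multiplexer schedules packets from the bit rates and the decoder buffer model, not from a fixed ratio. The function name `interleave` and the string "packets" are invented for the example.

```python
from itertools import islice
from typing import Iterable, Iterator


def interleave(video: Iterable[str], audio: Iterable[str],
               video_per_audio: int = 10) -> Iterator[str]:
    """Yield `video_per_audio` video packets, then one audio packet, repeatedly."""
    video_it, audio_it = iter(video), iter(audio)
    while True:
        burst = list(islice(video_it, video_per_audio))
        yield from burst
        audio_packet = next(audio_it, None)
        if audio_packet is not None:
            yield audio_packet
        if not burst and audio_packet is None:
            return  # both inputs are exhausted


video = [f"V{i}" for i in range(20)]
audio = ["A0", "A1"]
muxed = list(interleave(video, audio))
print(muxed[8:12])
# prints: ['V8', 'V9', 'A0', 'V10']
```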

In order to de-multiplex the transport stream, the receiver must know precisely what type of data is in each elementary stream. To satisfy this need, MPEG and DVB specify service information (SI) to be added during the multiplexing process. With this information and thanks to the PIDs, the receiver can route each incoming packet to the appropriate part of its software stack for decoding. Service Information is stored in database tables, packetized and broadcast as elementary streams.
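A rough Python sketch of the PID-based routing follows. It assumes the input is already aligned on 188-byte packet boundaries and simply groups whole packets by PID. The helper `make_packet` and the PID values are hypothetical and exist only to build test data.

```python
from collections import defaultdict
from typing import Dict, Iterator, List


def split_packets(stream: bytes) -> Iterator[bytes]:
    """Cut a byte string into 188-byte transport packets."""
    for offset in range(0, len(stream) - 187, 188):
        yield stream[offset:offset + 188]


def demultiplex(stream: bytes) -> Dict[int, List[bytes]]:
    """Group transport packets by PID, skipping packets without a sync byte."""
    by_pid: Dict[int, List[bytes]] = defaultdict(list)
    for packet in split_packets(stream):
        if packet[0] != 0x47:
            continue
        pid = ((packet[1] & 0x1F) << 8) | packet[2]
        by_pid[pid].append(packet)
    return by_pid


def make_packet(pid: int) -> bytes:
    """Build a hypothetical packet with an all-zero payload (demo data only)."""
    return bytes([0x47, (pid >> 8) & 0x1F, pid & 0xFF, 0x10]) + bytes(184)


stream = make_packet(0x100) + make_packet(0x101) + make_packet(0x100)
streams = demultiplex(stream)
print({hex(pid): len(packets) for pid, packets in streams.items()})
# prints: {'0x100': 2, '0x101': 1}
```

A real receiver would pass each group on to a PES or table parser instead of keeping whole packets in memory.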

The most common SI tables are:

- PAT: Program Association Table (MPEG)

- PMT: Program Map Table (MPEG)

- CAT: Conditional Access Table (MPEG)

- NIT: Network Information Table (DVB)

- SDT: Service Description Table (DVB)

- TDT: Time and Date Table (DVB)

PAT, the Program Association Table, describes which PID contains the Program Map Table for each service. A “Service” is a program, such as a TV channel, that groups video, audio and data streams.
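To make the PAT lookup concrete, the following Python sketch reads one PAT section and returns the PMT PID for each program number. It assumes the section bytes have already been extracted from the transport packets (after the pointer field) and it does not verify the CRC. The function name `parse_pat` and the sample section are illustrative only.

```python
from typing import Dict


def parse_pat(section: bytes) -> Dict[int, int]:
    """Map program_number -> PMT PID from one PAT section (CRC is not verified)."""
    if section[0] != 0x00:
        raise ValueError("the table_id of a PAT is 0x00")
    section_length = ((section[1] & 0x0F) << 8) | section[2]
    end = 3 + section_length - 4  # stop before the 4-byte CRC_32
    programs: Dict[int, int] = {}
    for i in range(8, end, 4):    # the program loop starts after the 8-byte header
        program_number = (section[i] << 8) | section[i + 1]
        pid = ((section[i + 2] & 0x1F) << 8) | section[i + 3]
        if program_number != 0:   # program 0 points to the NIT, not to a PMT
            programs[program_number] = pid
    return programs


# Hypothetical section: program 0 -> PID 0x0010 (NIT), program 1 -> PMT on PID 0x0100
sample = bytes([
    0x00, 0xB0, 0x11,        # table_id, flags + section_length (17)
    0x00, 0x01,              # transport_stream_id
    0xC1, 0x00, 0x00,        # version/current_next, section_number, last_section_number
    0x00, 0x00, 0xE0, 0x10,  # program 0 -> network PID 0x0010
    0x00, 0x01, 0xE1, 0x00,  # program 1 -> PMT PID 0x0100
    0x00, 0x00, 0x00, 0x00,  # CRC_32 placeholder (not checked here)
])
pat = parse_pat(sample)
print(pat)
# prints: {1: 256}
```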

PMT, the Program Map Table, describes all the streams in a service. It tells the receiver which stream contains the MPEG Program Clock Reference for the service.

CAT, the Conditional Access Table, describes the conditional access systems that are in the transport stream and provides information about how to decode them.

NIT, the Network Information Table, contains the name of the network and the network ID.

SDT, the Service Description Table, provides the name of the service, the service ID and its status.

TDT, the Time and Date Table, provides the current UTC time, a reference for the stream.
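As an example of what the TDT carries, this Python sketch decodes the 40-bit UTC time field: a 16-bit Modified Julian Date followed by hours, minutes and seconds in binary-coded decimal. The function names are invented for the sketch. The test value 0xC079124500 is the worked example given in the DVB SI specification (13 October 1993, 12:45:00).

```python
from datetime import datetime, timedelta, timezone

MJD_EPOCH = datetime(1858, 11, 17, tzinfo=timezone.utc)  # Modified Julian Date 0


def bcd(byte: int) -> int:
    """Decode one byte holding two binary-coded decimal digits."""
    return (byte >> 4) * 10 + (byte & 0x0F)


def decode_utc_time(field: bytes) -> datetime:
    """Decode the 5-byte UTC_time field: 16-bit MJD, then hhmmss in BCD."""
    if len(field) != 5:
        raise ValueError("UTC_time is 40 bits long")
    mjd = (field[0] << 8) | field[1]
    return MJD_EPOCH + timedelta(
        days=mjd,
        hours=bcd(field[2]),
        minutes=bcd(field[3]),
        seconds=bcd(field[4]),
    )


decoded = decode_utc_time(bytes.fromhex("C079124500"))
print(decoded)
# prints: 1993-10-13 12:45:00+00:00
```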

More information is available at http://www.interactivetvweb.org/tutorials/dtv_intro/dtv_intro

Note: The HamVideo transmitter signal does not contain tables. The needed information is provided by the receiving software written by Jean-Pierre Courjaud F6DZP. Commercial receivers, such as Set-Top Boxes, cannot decode HamVideo signals.

Receiving DVB takes the following steps:

- To receive and amplify the RF signal

- To demodulate the QPSK signal

- To recover the IQ symbols (error correction) and re-create the Transport Stream

- To recover the Elementary Streams (de-multiplexing)

- To decode, process and render the video, audio and data.
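The demodulation step in the list above can be illustrated with a very small Python sketch of a QPSK hard decision: each received IQ sample yields two bits, one from the sign of I and one from the sign of Q. The bit mapping used here (positive component decodes as 0) is an assumption made for the example. A real receiver also needs carrier and timing recovery and the error-correction stages, none of which are shown.

```python
from typing import Iterable, List


def qpsk_hard_decision(symbols: Iterable[complex]) -> List[int]:
    """Turn IQ samples into bits, two per symbol.

    Assumed mapping (illustrative): a positive component decodes as 0,
    a negative component as 1.
    """
    bits: List[int] = []
    for s in symbols:
        bits.append(0 if s.real >= 0 else 1)  # bit from the I component
        bits.append(0 if s.imag >= 0 else 1)  # bit from the Q component
    return bits


# Four noisy samples, one in each quadrant of the IQ plane
noisy = [0.9 + 1.1j, -1.2 + 0.8j, -0.7 - 1.0j, 1.0 - 0.9j]
bits = qpsk_hard_decision(noisy)
print(bits)
# prints: [0, 0, 1, 0, 1, 1, 0, 1]
```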